Why Music Producers Should Play An Instrument

Should music producers know how to play an instrument? Harry Levin explores the benefits of learning how to play an instrument.

How Playing an Instrument Can Improve Your Productions

More young musicians are choosing the electronic music production route these days. However, every artist should also consider learning an instrument. Playing an instrument opens up new ways of thinking about music. It also helps you understand music theory and other essential concepts.

Developing this musical knowledge will help you become a better music producer. You'll be able to communicate better with musicians, produce more well-rounded music, and have a smoother production process. As a result, you may find more opportunities as a producer in the music industry.

That said, learning how to play an instrument is not required. Many talented music producers create amazing music without knowing how to play one. Still, knowing how to play an instrument is beneficial for several reasons.

Why Learning Piano Helps with Music Production

At most electronic music production schools, the curriculum emphasizes basic keyboard skills (piano, keyboard, etc.). Piano is arguably the best instrument for learning music theory, motor skills, ear training, melodic and harmonic development, and more. Music producers also use MIDI keyboards and the piano roll in their DAW to develop musical ideas.

The piano is also the simplest instrument for generating ideas. You don't need advanced knowledge to mess around and come up with new ideas for a song. All 88 keys are laid out in front of you, like the letters on a computer keyboard. With two hands, you can play melody, harmony, and countermelody at the same time.

Why Learning A Single Voice Instrument Helps with Music Production

Knowing music theory and producing ideas with the piano are valuable skills. However, they don't give students the most profound knowledge an instrument can provide. This knowledge relates to the human aspects of music, such as motion and listening. To understand these aspects, it's best to learn a single voice instrument.

Single voice instruments, such as winds and strings, mostly produce one note at a time. Trumpet, trombone, violin, and cello are examples of single voice instruments. Unlike on the piano, you generally cannot play overlapping lines on them. They produce one idea at a time. This limitation pushes creativity and teaches players different lessons about music. With fewer options, you must find inventive ways to create.

For music producers, learning to play one line at a time might seem unnecessary. After all, electronic music tracks usually have many overlapping layers. A full song consists of several instruments, samples, drum patterns, effects, and other elements.

Yet learning a single voice instrument can still improve your music productions. For one, it forces you to focus on aspects of music beyond the finished product.

Learning A Single Voice Instrument

An essential part of learning a single voice instrument is physical technique. Playing these instruments is where motion and music meet. They need your constant input to produce sound: there has to be a continuous connection through your breath or hands. That's why practicing a single voice instrument feels closer to physical training than book learning.

When you practice a single voice instrument, you are practicing technique more than studying facts. It's comparable to weight lifting. You have to bench press with proper technique countless times to see results in your muscles. Similarly, you have to practice the same techniques on a single voice instrument countless times before you produce the best sound possible, whether that technique involves moving a bow across the strings of a violin or blowing air through a trumpet.

This physical demand is why practicing an instrument for twelve hours straight is nearly impossible. After a long practice session, you're both physically and mentally exhausted. Producing music for the same amount of time, by contrast, takes little physical exertion. However, with time and dedication, learning an instrument develops you far more as a musician. Your brain learns to associate physical movement with the sound it produces. You also become connected to the music in a way that working on a computer can't match. Motion and music become intertwined.

This awareness of motion and music doesn't go away either. It's what helps you learn other instruments faster. Your brain knows what sounds good, and your body knows how to make something sound good, whether that means holding a bow, touching keys, or blowing through a mouthpiece. That's how musicians like Stevie Wonder and Kevin Parker can play most or all of the instruments on their albums.

The Relationship Between Movement and Music

The awareness of movement and music goes beyond yourself. Understanding it will help you make others move to your music. That's because it takes more than a strong beat or other rhythmic elements to make people dance to a song.

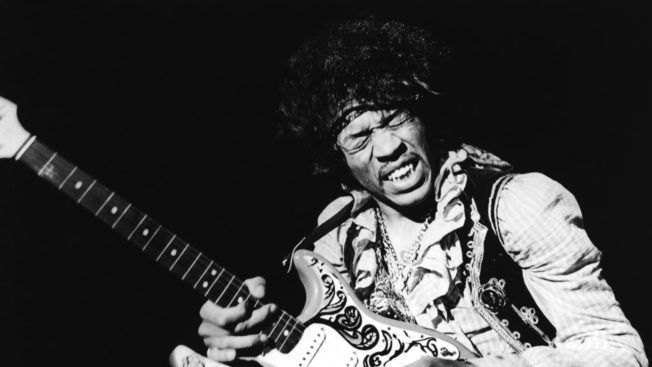

That's why certain tracks make crowds go crazy while others fall flat, even if they sound similar. When you watch Jimi Hendrix play guitar or John Coltrane play the saxophone, they never stand still. They aren't locked into the beat either. Instead, they move to the music as they create it, and the audience follows along.

Someone in the audience could guess why this movement is happening. But you can only truly understand it once you have experienced the reaction yourself. When you play an instrument, you reach a state somewhere between conscious control and pure instinct. Your brain is in control, but you also trust your instincts at every turn.

No one can fully replicate this physical state on a computer, because playing an instrument is a human process. Quantization and programming are not human; they're mechanical. They can make your music sound static and robotic. Music that lacks a groove can also feel like something is missing. Groove triggers a physical reaction that causes people to move to the music.

This is why groove plugins like Humanizer exist. Music producers use various plugins and production techniques to humanize programmed music. With instruments, by contrast, each moment is imperfect. There's no need for these programs because everything happening is human. The best music reflects the imperfect human experience through sound. Those imprecise moments are what cause this instinctual movement.
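To make the difference between quantized and humanized timing concrete, here is a minimal Python sketch. It does not describe how Humanizer or any other specific plugin works. It assumes notes are stored as onset times in beats with MIDI velocities, snaps them to a grid (quantization), then adds small random offsets to timing and velocity (a crude form of humanizing). The `Note`, `quantize`, and `humanize` names and the default amounts are hypothetical, chosen only for illustration.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    start: float   # onset time in beats (hypothetical representation)
    velocity: int  # MIDI velocity, 1-127


def quantize(notes, grid=0.25):
    """Snap each onset to the nearest grid line (0.25 beats = 16th notes in 4/4)."""
    return [replace(n, start=round(n.start / grid) * grid) for n in notes]


def humanize(notes, max_shift=0.02, max_vel_change=8, seed=None):
    """Nudge each onset and velocity by a small random amount.

    Illustrative defaults only. Onsets are clamped at 0 so nothing starts
    before the clip, and velocities stay in the valid MIDI range.
    """
    rng = random.Random(seed)
    out = []
    for n in notes:
        start = max(0.0, n.start + rng.uniform(-max_shift, max_shift))
        velocity = n.velocity + rng.randint(-max_vel_change, max_vel_change)
        out.append(replace(n, start=start, velocity=min(127, max(1, velocity))))
    return out


# Usage: a loosely played phrase, locked to an 8th-note grid, then loosened again.
played = [Note(0.03, 96), Note(0.49, 80), Note(1.02, 101), Note(1.47, 77)]
locked = quantize(played, grid=0.5)
loosened = humanize(locked, seed=1)
print([n.start for n in locked])
print([(round(n.start, 3), n.velocity) for n in loosened])
```

Random jitter like this is the simplest possible approach, and it also shows the limitation the article points to: a player's timing pushes and pulls with intent, while random offsets only blur the grid.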

The more experienced you are at playing an instrument, the better you can recreate this physical reaction, whether you're working with an instrument or a computer loaded with Ableton Live. Have you ever created a track that made your body move? That's the same instinctual feeling you get when playing an instrument in the moment. If the sound creates an instinctual feeling in you, it will likely do the same for your audience. And if your tracks get the audience moving, they're doing their job.

Listening and Creating at the Same Time

When you're producing music, you create, then listen. For example, you add an effect, listen to it, and then make a decision. When playing an instrument, however, you have to listen and create at once. You place equal focus on what you're playing and what you're hearing.

By doing so, you hear what you're playing both as an artist and as a spectator. The spectator's role gives you some distance from what you're creating. That distance matters because, as an artist, you naturally become attached to the music you create. It's your music, after all.

However, if you plan on being a professional artist, you must learn how to make music for other people. You have to be conscious of what they are hearing. No one buys tickets to the concert in your room, and no one streams music they don’t like. By learning an instrument, you develop a more critical ear.

A critical ear is essential to being a successful artist. Audiences are often critical, especially now with social media. If you maintain a critical ear and hold yourself to a high standard, your music will be better. Making music will also be more satisfying.

In Conclusion

Playing an instrument is very different from producing music on a computer. Learning how to play an instrument teaches you lessons about music beyond what you program or master in a DAW, and those lessons can only be understood through hands-on experience. Because what you learn is both theoretical and emotional, you can apply it to other pursuits within music, including music production.